Is DeepSeek Popping the AI Bubble?

It might be different from what the mainstream media is saying ...

Nvidia lost about 17% or close to $593 billion in market value.

- Reuters

Chinese DeepSeek wipes off $1 trillion from US tech giants in a day.

- Times of India

These shocking headlines broke my inbox on Monday, 28 Jan.

US stocks were down, and crypto markets were plummeting.

What on earth is happening?

The Disruptor

DeepSeek, a Chinese AI company, introduced a new large language model (LLM), R1, on 20th January.

In recent days, the AI Assistant application built on this model has been the No. 1 app on the Apple App Store, with ChatGPT now in second place.

It’s Good

Unlike traditional LLMs, R1 emphasizes autonomous reasoning through reinforcement learning (RL) and supervised fine-tuning (SFT), enabling it to outperform GPT-4 in benchmarks like MMLU.

You can think of MMLU as an AI exam that covers multiple topics, just like your college coursework. Instead of testing one area (like just math or history), MMLU tests AI models on 57 subjects spanning STEM, humanities, social sciences, and more. R1 scored 90.8%, while GPT-4 scored 86.4% (the human college student average is about 89%).
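To make the "exam" idea concrete, here is a minimal sketch of how a multi-subject, multiple-choice benchmark can be scored. The questions and answers below are made-up toy data, and the function name `score_benchmark` is my own; the real benchmark has thousands of questions and its official scoring script may differ in details.

```python
from collections import defaultdict

def score_benchmark(results):
    """Score (subject, predicted_choice, correct_choice) records.

    Returns per-subject accuracy and the overall fraction answered correctly.
    """
    per_subject = defaultdict(lambda: [0, 0])  # subject -> [correct, total]
    for subject, predicted, correct in results:
        per_subject[subject][0] += int(predicted == correct)
        per_subject[subject][1] += 1

    subject_acc = {s: c / t for s, (c, t) in per_subject.items()}
    total_correct = sum(c for c, _ in per_subject.values())
    total_questions = sum(t for _, t in per_subject.values())
    return subject_acc, total_correct / total_questions

# Toy data only: five invented questions across three subjects.
toy_results = [
    ("math", "B", "B"),
    ("math", "C", "A"),
    ("history", "D", "D"),
    ("history", "A", "A"),
    ("law", "B", "B"),
]

subject_acc, overall = score_benchmark(toy_results)
print(subject_acc)
print(f"overall accuracy: {overall:.1%}")
```

A score like 90.8% is this kind of accuracy figure, just computed over a much larger question set.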

If the above sounds fuzzy, all you need to know is that R1 is an excellent model and a good contender to all the existing models.

But isn’t it just another LLM? Why is it breaking the internet?

It’s Open-source and Cheap

The R1 model's open-source MIT license and 128K-token context window made it an instant hit, topping app stores and sparking a developer frenzy.

When we say a model is open-source, it means that anyone can access, use, modify, and distribute its code and model weights (the actual trained AI brain) without needing permission from a company or organization.

If you are worried that the DeepSeek AI assistant application will send your data to the Chinese government, you can download the model and run your own chatbot locally. I am putting together a guide to running this model locally with Ollama and will share it soon.

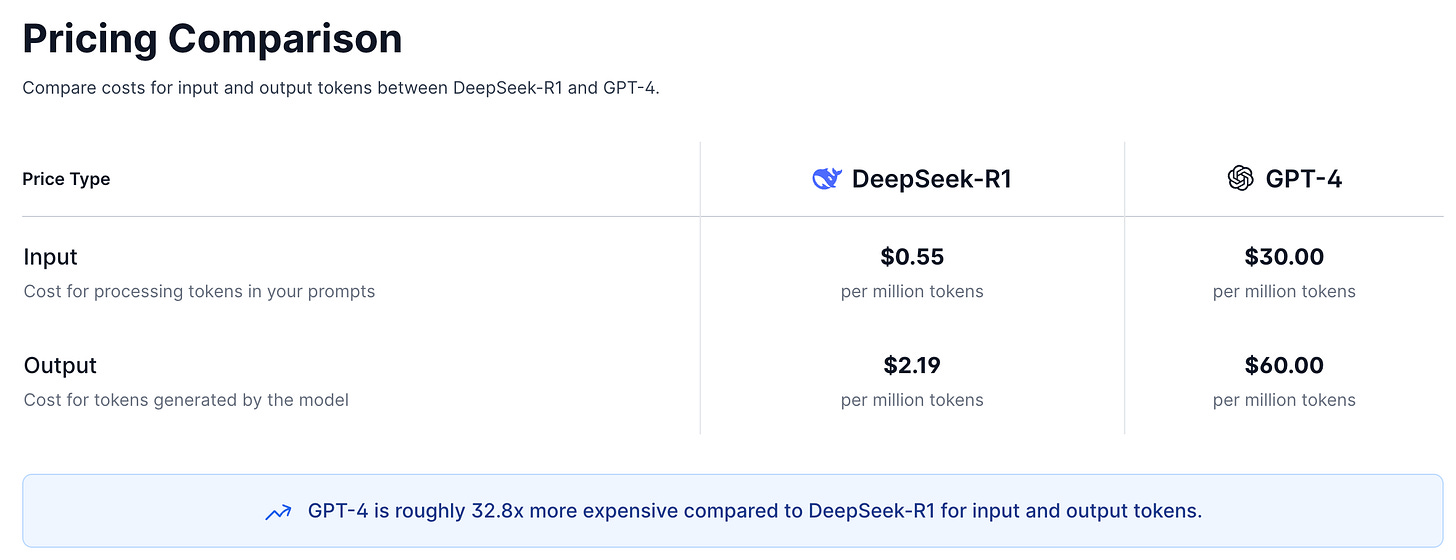

The following diagram from docsbot.ai shows how much cheaper the R1 model is compared to OpenAI:

Read that line in blue: GPT-4 is 32.8x more expensive than R1 for developers and companies that want to use it.

If you subscribe to the ChatGPT Plus tier, you pay $20 per month for access to the better reasoning model. The app and the web version of the DeepSeek AI assistant are free. This is one of the reasons the app could top the Apple App Store in such a short time.

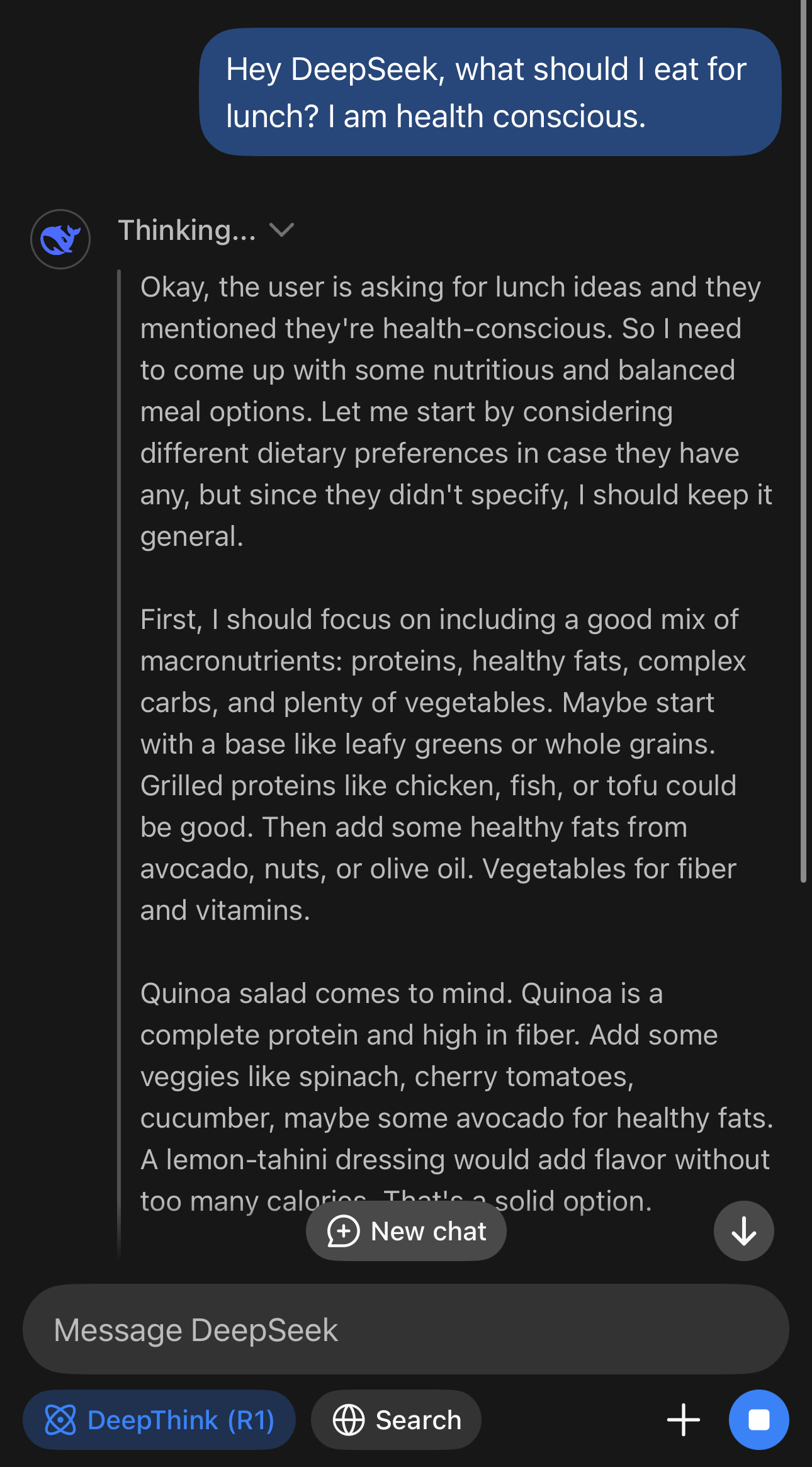

Side note: It is so enjoyable to watch R1's thought process unfold before it answers your question.

But How Can It Be So Cheap?

DeepSeek reportedly trained R1 for under $6 million in just two months, a stark contrast to the billions spent by U.S. firms. Here are some of the reasons it could achieve such a low cost (NBC News has a great article explaining this more technically):

Mixture of Experts (MoE)

The large model is divided into submodels, each with its own expertise. A submodel is activated only when its particular knowledge is relevant, which makes the model much more efficient because only a small part of it runs for any given input.
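The routing idea can be sketched in a few lines of Python. This is a toy illustration, not DeepSeek's implementation: the four "experts" are simple functions, the gate scores are hard-coded (in a real model a learned router computes them from the input), and the names `route_top_k` and `moe_forward` are my own.

```python
import math

def softmax(scores):
    """Turn raw gate scores into probabilities that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route_top_k(gate_scores, k):
    """Pick the k highest-scoring experts and renormalize their weights."""
    probs = softmax(gate_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]

def moe_forward(x, experts, gate_scores, k=2):
    """Run only the selected experts and blend their outputs by weight."""
    chosen = route_top_k(gate_scores, k)
    output = sum(weight * experts[i](x) for i, weight in chosen)
    return output, [i for i, _ in chosen]

# Toy experts: each stands in for a specialized sub-network.
experts = [lambda x: x + 1, lambda x: x * 2, lambda x: x ** 2, lambda x: -x]
gate_scores = [0.1, 2.0, 1.5, -1.0]  # hypothetical router output for this input

output, active = moe_forward(3.0, experts, gate_scores, k=2)
print("active experts:", active)
print("blended output:", output)
```

With `k=2`, only two of the four experts do any work for this input, which is where the compute savings come from.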

Hardware Tweak

Although DeepSeek could only access Nvidia H800 GPUs (which are less advanced) due to U.S. export bans on advanced chips, it reduced the computing power those chips needed by customizing the communication scheme between chips, among other efficiency improvements.

Mixed Precision Framework

DeepSeek combines full-precision and low-precision floating point numbers, which results in a balance between accuracy and efficiency.
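Here is a small sketch of why the mix matters. It uses 16-bit floats from Python's standard `struct` module as a stand-in for "low precision"; that choice, and the helper name `to_fp16`, are my assumptions for illustration and say nothing about which number formats DeepSeek actually uses. The idea: storing values in low precision is cheap and loses little, but doing a long running sum in low precision goes badly wrong.

```python
import struct

def to_fp16(x):
    """Round a Python float to the nearest 16-bit (half-precision) float."""
    return struct.unpack("<e", struct.pack("<e", x))[0]

STEP = 0.001
N = 10_000  # the exact sum of N steps would be 10.0

# Everything in low precision: the running total is rounded to fp16 each step.
low = 0.0
for _ in range(N):
    low = to_fp16(low + to_fp16(STEP))

# Mixed precision: values are stored in fp16, but the running total
# is kept in Python's full-precision float.
mixed = 0.0
for _ in range(N):
    mixed += to_fp16(STEP)

print("all low precision:", low)
print("mixed precision:  ", mixed)
```

The all-low-precision total stalls well short of 10 because each small step eventually rounds away to nothing, while the mixed version stays close to 10. Keeping the sensitive parts in higher precision and the bulk in lower precision is the balance described above.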

How Is This Related to The Stock Price of U.S. Chip Manufacturers?

The release of the R1 model has challenged key assumptions about the AI industry, particularly the assumption that leading models require heavy spending on Nvidia's high-end GPUs. It outperformed leading models while spending only a fraction of others' training costs.

Are multibillion-dollar investments in AI infrastructure (heavily based on Nvidia GPUs) necessary, or can similar results be achieved at far lower cost?

My Thoughts

While it might affect short-term market movements and emotions, I believe the breakthrough of the R1 model from DeepSeek would be very beneficial for AI development.

We are just at the beginning of the AI era, and its applications will go far beyond our expectations. Competition is great for consumers, and other AI companies now know that far more can be optimized than they imagined. Besides, from where we stand today, we can't yet imagine how much computing power the future world will need.

"I think there is a world market for maybe five computers".

- Thomas J. Watson, the president of IBM in 1943

I am not a financial advisor, nor am I telling you to catch the falling knife of the 17% drop in Nvidia stock. However, I am optimistic that this breakthrough will speed up AI development even further.

Over the coming weeks, expect a variety of content, including high-level knowledge bases and step-by-step guides about AI, robotics, self-driving cars, and other emerging technologies.

If this catches your interest, subscribe to “The Semi-Expert” newsletter to receive my weekly learnings directly in your inbox. I’ll keep you company on this journey as we adapt to these exciting technologies together.